Overview on MPEG-I Scene Description

MPEG-I Scene Description (MPEG-I SD) is a standard developed by MPEG for immersive media. It defines the structure and composition of a 3D scene, referencing and positioning 2D and 3D assets in the scene, and provides information for rendering the scene properly.

The MPEG-I Scene Description standard and glTF (Graphics Library Transmission Format) share a connection in the realm of 3D scenes and models. glTF was chosen as the base scene description technology. Developed by the Khronos Group, glTF is an open standard that integrates media assets using technologies like OpenGL. In essence, glTF provides a way to describe 3D model geometry, appearance, scene hierarchy, and animation1.

MPEG-I Scene Description (ISO/IEC 23090-14) specifies how to reference and position 2D and 3D assets within a scene, enabling proper rendering and creating rich 3D scenes with real-time media delivery and interactivity. Khronos has adopted the MPEG-I Scene Description extensions as defined in https://github.com/KhronosGroup/glTF/blob/main/extensions/README.md.

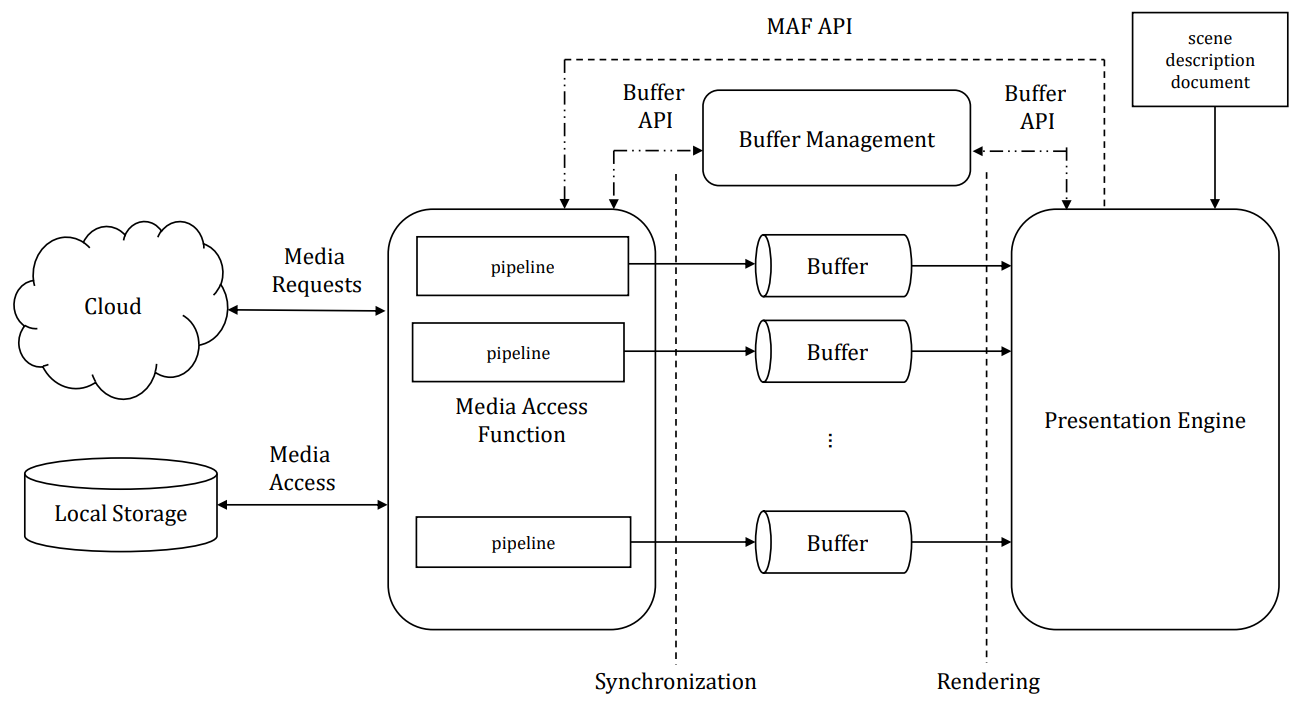

Reference architecture:

MPEG-I SD defined the following reference architecture.

- The Presentation Engine replaces a traditional 2D media player. It is responsible for multi-modal rendering of a scene composed of audiovisuals and haptics media. It also provides information about the viewer’s and object pose to optimize delivery.

- The Media Access Function (MAF) is responsible for the media access and processing functions. Media handling is delegated to the MAF by the Presentation Engine. The MAF constructs a suitable media pipeline to transform media from a delivery format into the formats used during rendering. the MAF uses MIME type and codec parameters to identify support for the media reconstruction and assemble the adequate media pipeline.

- The MAF API is used by the Presentation Engine to request immersive media in the scene.

- The Buffers are fed by the processed media with a format defined through the scene description document in SO/IEC 23090-14

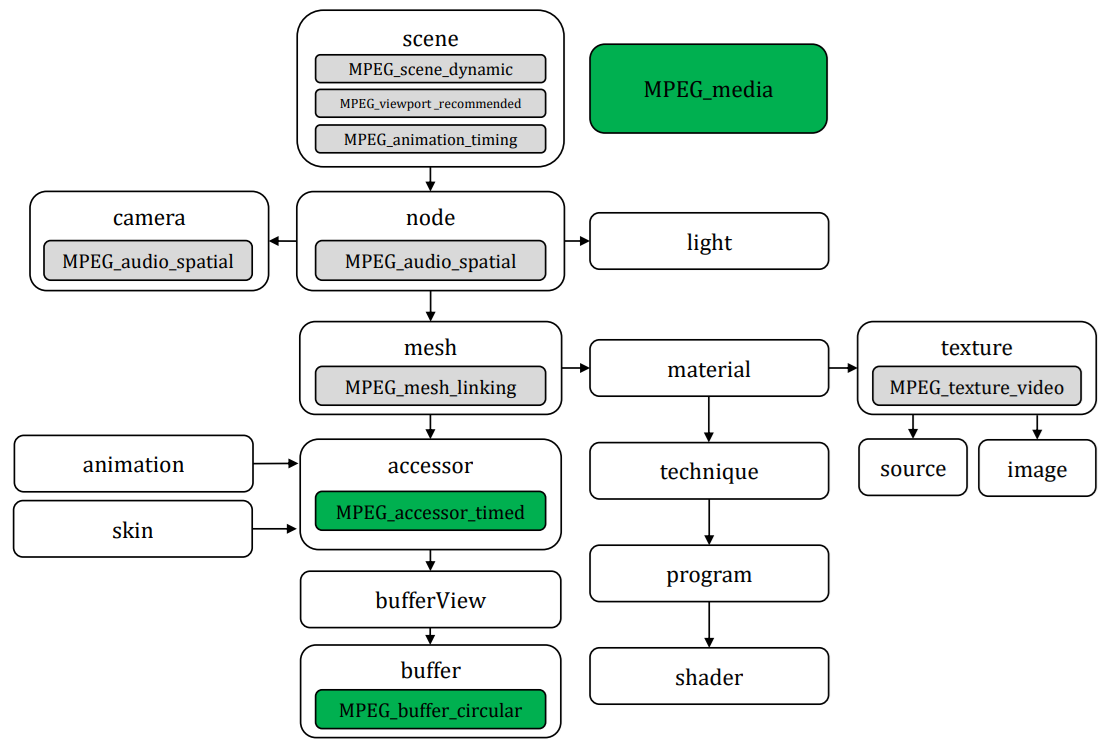

Vendor extensions to Khronos glTF 2.0

A first set of extensions (green in the figure) enable the timed framework including:

- MPEG_media, which enables the referencing of external media streams that are delivered over protocols such as RTP/SRTP, MPEG-DASH, or others

- MPEG_accessor_timed, used in a scene that contains timed media and/or metadata to describe access to the dynamically changing data

- MPEG_buffer_circular, to extend the buffer into a circular buffer

A second group of extensions (gray in the figure) enables the inclusion of dynamic and temporal media including:

- MPEG_texture_video. provides the possibility to link a texture object defined in glTF 2.0 to media and its respective track

- MPEG_audio_spatial, to support spatial audio

- MPEG_mesh_linking, provides the possibility to link a mesh to another mesh in a glTF asset

- MPEG_scene_dynamic, MPEG_viewport_recommended, and MPEG_animation_timing, which indicate that a particular form of timed data is provided to the Presentation Engine during the consumption of the scene and that it shall adapt to the changing information.

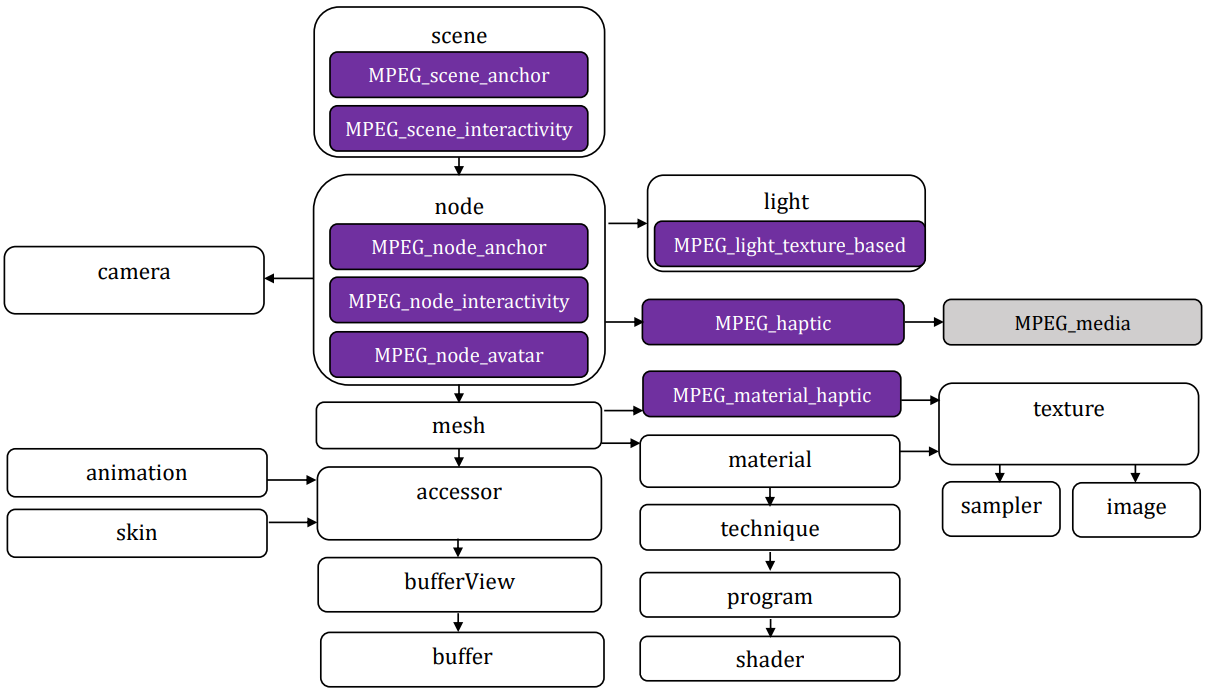

A third group of extensions enables the distribution of real-time immersive and interactive media content including:

- Augmented Reality anchor (MPEG_scene_anchor, MPEG_node_anchor), to support AR experiences where virtual content is inserted into the user’s real environment

- Interactivity (MPEG_scene_interactivity, MPEG_node_interactivity), to describe interactivity at runtime with support for interactions between user and virtual objects and between virtual objects, with triggers based on proximity, visibility, collision or user input.

- Avatar (MPEG_node_avatar), to support the representation of 3D avatars.

- Lighting (MPEG_light), to provide a realistic user experience including shadows and lighting.

- Haptics (MPEG_haptic, MPEG_material_haptic), to support haptics based on the MPEG standard for Coded representation of Haptics ny attaching haptic information to a node or to a mesh.